This thesis explores the phenomenon of trust in artificial intelligence (AI) and the impact of this trust on personal data processing. The aim of this research was to understand exactly how AI companions manage to induce empathy, what deceptive patterns are used in doing so, and what ethical, legal, and social issues may arise in this process.

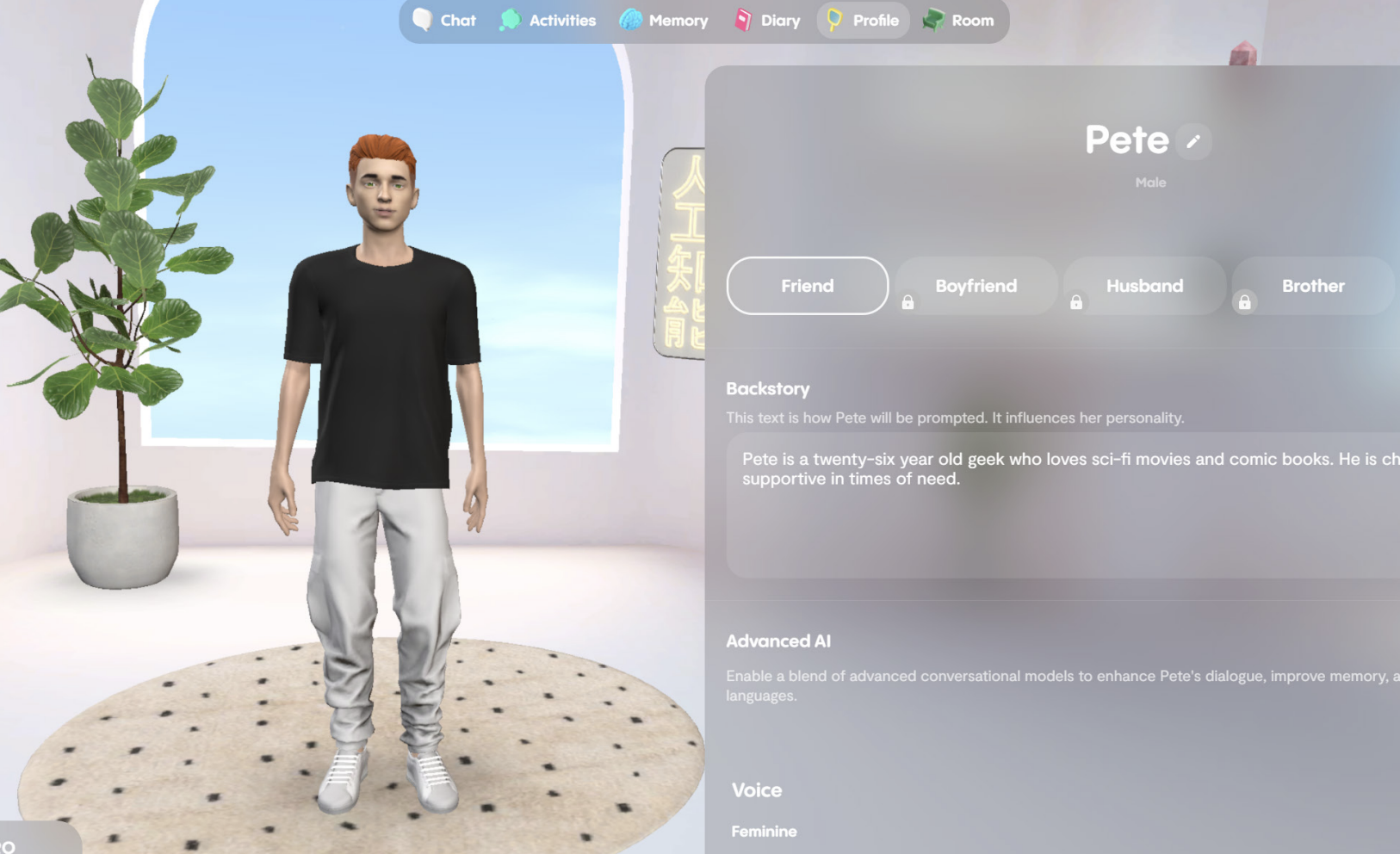

The theoretical part traces in detail the history of technology, particularly AI, and its real-world applications. It also examines the concept of personal data: how it is processed, and the laws and practices that apply to it. It then presents cases in which interactions with AI companions such as Replika have led to consequences ranging from positive to tragic.

The practical part comprises a qualitative analysis of the Replika app, covering everything from the UX of the main website to deceptive patterns in the app itself. Finally, an experiment was conducted with a custom chatbot, built on the ChatGPT platform and programmed to collect personal data from the participants.